The Use of Data in Creating, Implementing, and Assessing Evidence-based Pedagogies

Jayson Nissen, California State University, Chico

James Day, University of British Columbia

Paula Heron, University of Washington

Physics education researchers often use statistics to develop insights into student learning and attitudes in physics courses, and as a guide in developing instructional strategies. Educators rely on knowledge of statistics to understand research articles, identify effective practices, and evaluate the effectiveness of their courses. While an education in physics provides robust opportunities to develop mathematical proficiency, it rarely develops the specialized knowledge necessary to conduct or critically read the wide range of statistical analyses used in education research.

To help educators and researchers extend their statistical literacy, we ran a workshop on issues surrounding p-values. “A p-value measures whether an observed result can be attributed to chance. But it cannot answer the researcher's real question: what are the odds that it is correct?”1 We focused on p-values because researchers commonly misuse and misinterpret them.2
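The gap the quote describes can be made concrete with Bayes' rule. The sketch below computes the fraction of statistically significant results that reflect a real effect. The prior (how often the hypotheses being tested are actually true) and the statistical power are hypothetical inputs that a p-value does not supply, and the function name is illustrative only.

```python
def prob_effect_is_real(prior, power, alpha=0.05):
    """Share of statistically significant results that reflect a real effect.

    prior: assumed fraction of tested hypotheses that are true (hypothetical input)
    power: assumed probability that a real effect yields p < alpha (hypothetical input)
    alpha: significance threshold, i.e. the false-positive rate when the null is true
    """
    true_hits = prior * power          # real effects that reach significance
    false_hits = (1 - prior) * alpha   # null effects that reach significance by chance
    return true_hits / (true_hits + false_hits)


if __name__ == "__main__":
    # Same threshold and power, different plausibility of the hypotheses tested.
    for prior in (0.5, 0.1, 0.01):
        p_real = prob_effect_is_real(prior, power=0.8)
        print(f"prior = {prior:.2f}: P(real effect | p < 0.05) = {p_real:.2f}")
```

With 80% power and a 0.05 threshold, a significant result corresponds to about a 64% chance of a real effect when one in ten tested hypotheses is true, and far less when the hypothesis is a long shot. The same p-value can therefore carry very different odds of being correct.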

To address the misuse and misinterpretation of p-values, the American Statistical Association (ASA) released a statement that included six principles for using p-values; our workshop addressed four of these.

We first looked at choices that make p-values useful (or not), addressing principles three and four from the ASA’s statement: (3) scientific conclusions and business or policy decisions should not be based only on whether a p-value passes a specific threshold, and (4) proper inference requires full reporting and transparency.

We asked participants to read “False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant”3 before the workshop. The article presents two studies with statistically significant findings: that listening to a children’s song makes people feel older, and that listening to a song about old age makes people actually younger. The authors describe the first result as unlikely and the second as necessarily false. Using simulations, they show that flexible, often undisclosed choices in data collection and analysis can greatly inflate the false-positive rate, making it likely that false positives are common in the research literature. The article closes by providing guidelines for authors and reviewers to minimize the likelihood of reporting false-positive results.
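The kind of simulation the article describes can be reproduced in miniature. The sketch below is not the authors' code. It assumes two groups drawn from the same population, so every "significant" difference is a false positive; it uses z-tests with a known standard deviation rather than t-tests, to stay within Python's standard library; and it models only two flexible choices: testing two correlated dependent variables (and their average), and adding participants when the first look falls short. The group size, number of added participants, correlation, and all function names are illustrative assumptions.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def z_test_p(a, b, sigma):
    """Two-tailed p-value for a difference in means when the population SD is known."""
    se = sigma * (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def draw(rng, k, r):
    """k participants, each with two unit-variance outcomes correlated at r (no group effect)."""
    rows = []
    for _ in range(k):
        x = rng.gauss(0, 1)
        y = r * x + (1 - r * r) ** 0.5 * rng.gauss(0, 1)
        rows.append((x, y))
    return rows


def any_outcome_significant(g1, g2, r, alpha):
    """Test outcome 1, outcome 2, and their average; succeed if any reaches p < alpha."""
    sd_avg = ((1 + r) / 2) ** 0.5  # SD of (x + y) / 2 when Var(x) = Var(y) = 1
    tests = [
        ([x for x, _ in g1], [x for x, _ in g2], 1.0),
        ([y for _, y in g1], [y for _, y in g2], 1.0),
        ([(x + y) / 2 for x, y in g1], [(x + y) / 2 for x, y in g2], sd_avg),
    ]
    return any(z_test_p(a, b, s) < alpha for a, b, s in tests)


def false_positive_rate(flexible, n_sims=3000, n=20, extra=10, r=0.5, alpha=0.05, seed=1):
    """Fraction of simulated null studies that end up reporting p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        g1, g2 = draw(rng, n, r), draw(rng, n, r)
        if not flexible:
            # One pre-planned test on one outcome at the planned sample size.
            hits += z_test_p([x for x, _ in g1], [x for x, _ in g2], 1.0) < alpha
            continue
        if any_outcome_significant(g1, g2, r, alpha):
            hits += 1
            continue
        # Not significant yet: add `extra` participants per group and look again.
        g1 += draw(rng, extra, r)
        g2 += draw(rng, extra, r)
        hits += any_outcome_significant(g1, g2, r, alpha)
    return hits / n_sims


if __name__ == "__main__":
    print("one pre-planned test:", false_positive_rate(flexible=False))
    print("flexible analysis:   ", false_positive_rate(flexible=True))
```

The pre-planned analysis should hover near the nominal 5%, while combining just these two choices pushes the false-positive rate well above it, even though no real effect exists in any simulated study.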

After discussing the article, participants explored the effects of p-hacking using the Hack Your Way to Scientific Glory tool at https://fivethirtyeight.com/features/science-isnt-broken/. The tool tests for a statistical relationship between the political party of elected officials and the performance of the economy. Depending on which variables they chose for the analysis, participants could show that either Republican or Democratic affiliation had a statistically significant correlation with either better or worse economic outcomes. The article and the p-hacking activity illustrate the broad set of choices that researchers face when using statistics and highlight how some choices can lead to unreliable results.
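A back-of-the-envelope calculation shows why trying many variable combinations makes a significant result easy to find. Assuming k independent tests of hypotheses that are all truly null, the chance that at least one reaches p < α is 1 − (1 − α)^k. Independence is an idealization: the tool's specifications reuse the same data and are correlated, so the real figure will differ. The function name is illustrative.

```python
def chance_of_spurious_hit(k, alpha=0.05):
    """Probability that at least one of k independent tests gives p < alpha
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** k


if __name__ == "__main__":
    # Each extra specification tried is another chance for noise to look like a finding.
    for k in (1, 5, 20, 100):
        print(f"{k:3d} specifications tried: {chance_of_spurious_hit(k):.3f}")
```

With 20 independent specifications, a "significant" result turns up by chance alone more often than not.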

Next we looked at the additional information needed to interpret a p-value, addressing principles five and six from the ASA’s statement: (5) a p-value, or statistical significance, does not measure the size of an effect or the importance of a result, and (6) by itself, a p-value does not provide a good measure of evidence regarding a model or hypothesis.

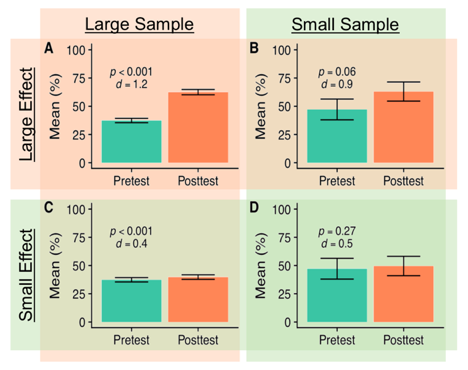

To address the need for additional measures to inform conclusions drawn from statistical tests, participants used four contrasting cases of pretest and posttest data to explore the relationships among effect size, statistical significance, and sample size. The effect size was calculated using Cohen’s d, which is the difference in the means divided by the pooled standard deviation, with Hedges’ correction for small-sample bias. The p-value was calculated with a paired-samples, two-tailed t-test. Figure 1 contains four bar graphs whose error bars show one standard error. The columns in Figure 1 have different sample sizes and the rows have different effect sizes. Figure 1.B reports a large effect size, d = 0.9, that was not statistically significant, while Figure 1.C reports a small effect size, d = 0.4, that was statistically significant. Together, 1.B and 1.C illustrate that a p-value alone cannot determine the educational significance of a result.
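A minimal sketch of the two calculations described above, assuming raw pretest and posttest scores for the same students are available. The function names are hypothetical, and the correction factor J = 1 − 3/(4(n1 + n2) − 9) is the common independent-groups approximation to Hedges' correction; the workshop's exact formula may differ. To avoid a SciPy dependency, the t-distribution p-value uses a standard closed-form series that is valid for integer degrees of freedom.

```python
import math
from statistics import fmean, stdev


def t_two_tailed_p(t, df):
    """Two-tailed p-value of Student's t for integer df (closed-form series)."""
    theta = math.atan(abs(t) / math.sqrt(df))
    c, s = math.cos(theta), math.sin(theta)
    if df % 2 == 1:
        # Odd df: A = (2/pi) * [theta + sin * (cos + (2/3)cos^3 + (2*4)/(3*5)cos^5 + ...)]
        term, total = c, 0.0
        for j in range((df - 1) // 2):
            total += term
            term *= c * c * (2 * j + 2) / (2 * j + 3)
        a = (2 / math.pi) * (theta + s * total)
    else:
        # Even df: A = sin * (1 + (1/2)cos^2 + (1*3)/(2*4)cos^4 + ...)
        term, total = 1.0, 0.0
        for j in range(df // 2):
            total += term
            term *= c * c * (2 * j + 1) / (2 * j + 2)
        a = s * total
    return max(0.0, 1 - a)  # A = P(|T| < t), so p = 1 - A


def hedges_g(pre, post):
    """Cohen's d (mean difference / pooled SD) with Hedges' small-sample correction."""
    n1, n2 = len(pre), len(post)
    pooled_var = ((n1 - 1) * stdev(pre) ** 2 + (n2 - 1) * stdev(post) ** 2) / (n1 + n2 - 2)
    d = (fmean(post) - fmean(pre)) / math.sqrt(pooled_var)
    j = 1 - 3 / (4 * (n1 + n2) - 9)  # approximate correction factor (assumption)
    return j * d


def paired_t_test(pre, post):
    """Paired-samples, two-tailed t-test on the post - pre differences."""
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, t_two_tailed_p(t, n - 1)


def p_for_effect(d_z, n):
    """p-value of a paired t-test whose mean difference is d_z standard deviations
    of the differences: t = d_z * sqrt(n), with n - 1 degrees of freedom."""
    return t_two_tailed_p(d_z * math.sqrt(n), n - 1)


if __name__ == "__main__":
    # Hypothetical illustration: assume pre/post scores have equal SDs and correlate
    # at r = 0.5, so the SD of the differences equals the pooled SD and d_z = d.
    for d, n in [(0.9, 6), (0.4, 100)]:
        print(f"d = {d}, N = {n}: p = {p_for_effect(d, n):.4f}")
```

Under the stated assumption, the d = 0.9, N = 6 case gives p > 0.05 while the d = 0.4, N = 100 case gives p < 0.05, mirroring the contrast between panels B and C. Because t = d_z√N for a paired test, sample size alone can move a fixed effect across the significance threshold, which is why the effect size must be reported alongside the p-value.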

Figure 1. Bar plots comparing the pretest and posttest scores in four courses. The sample size (N) was 100 in the left column and 6 in the right column. The effect size was large in the top row and small in the bottom row. Comparing B and C shows that a large effect may lack statistical significance, while a small effect may have statistical significance.

While developing the workshop, we collected resources on quantitative methods. These resources are publicly available at https://tinyurl.com/statworkshopresources and are organized into six topics:

- Popular media, which covers blogs, books, and podcasts that discuss various issues of statistical analysis.

- Broad resources for conducting statistical analyses.

- Resources on p-values and effect sizes.

- Data visualizations.

- Resources for preregistering studies.

- Other fundamentals.

We invite readers to review and comment on the materials and to recommend new materials.

This workshop is part of a collaborative effort to help new and emerging quantitative researchers in discipline-based education research develop their statistical literacy. Following the workshop at Foundations and Frontiers in Physics Education Research – Puget Sound, a similar workshop was held at the 2018 Physics Education Research Conference. The team is working on a proposal for a workshop at the 2019 meeting of the National Association for Research in Science Teaching and will run a workshop at the 2019 American Association of Physics Teachers Summer Meeting.

Jayson Nissen is a Postdoctoral Researcher in the Department of Science Education at California State University, Chico.

James Day is a Research Associate for the Stewart Blusson Quantum Matter Institute at the University of British Columbia.

Paula Heron is a professor of physics at the University of Washington, where she is a member of the Physics Education Group.

(Endnotes)

1. R. Nuzzo, “Scientific method: statistical errors,” Nature News, 506(7487), 150-152 (2014).

2. R. L. Wasserstein & N. A. Lazar, “The ASA’s statement on p-values: context, process, and purpose,” The American Statistician, 70(2), 129-133 (2016).

3. J. P. Simmons, L. D. Nelson, & U. Simonsohn, “False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant,” Psychological Science, 22(11), 1359-1366 (2011).

Disclaimer – The articles and opinion pieces found in this issue of the APS Forum on Education Newsletter are not peer refereed and represent solely the views of the authors and not necessarily the views of the APS.